Howdy, fellow coders! Today, I want to talk about a tool that has been making waves in the programming community lately: GitHub Copilot, which I have been reviewing for about a month now. As a software developer, you know that coding can be a challenging and...

My Linux Toolbox ’22

Having completed my review of the System76 and HP Dev One Linux-powered developer laptops last week, I got some requests about what my work stack looks like on Linux compared to what it was on macOS. I use some of these applications on both systems but am listing them anyway...

Pallet Town: SQLAlchemy Performance I

Readers of this blog and Coder Radio listeners will know that I have fallen for the snake, and by that I of course mean Python! Typing that out cracks my little Ruby heart, but for reasons that I’ve explained at length on the show, The Mad Botter has moved to Python as...

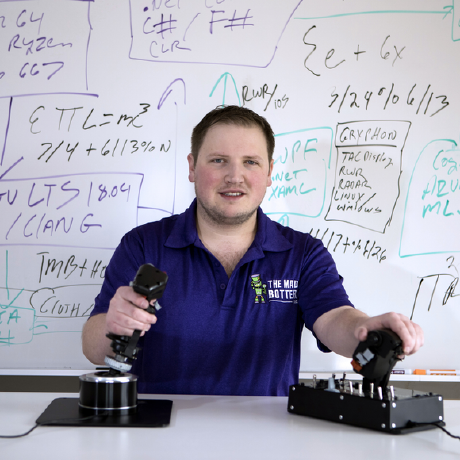

Why TMB Chose C++ in ’21

I don't normally enjoy writing controversial posts about why new technologies are bad; I LOVE it! So here we go! We're starting a new large-scale first-party project over at The Mad Botter (sorry, no details today) that requires a significant level of low-level...

Ruby 3 Typing

Soutaro Matsumoto and the Ruby team over at Square have a proposal for typing in Ruby 3. My initial reaction was a befuddled sort of confusion as though I were a dog who woke up with a human's hands. It took several readings for me to understand why Ruby developers...

JSON & JSONB in Active Record & PostgreSQL

A quick look at Active Record JSON & JSONB.

More from Mike:

How to Create a Private Ruby Gem

Ruby Gems are the...

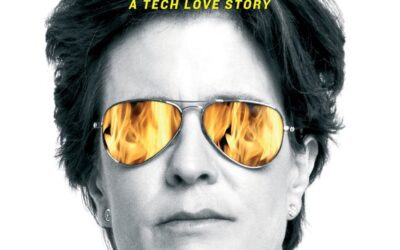

Burn Book Review

In the world of...

PWAs in 2024

Progressive Web...

Fly.io VS Render VS Dokku – Fight!

There's never been...