Code Journal 1.3.4 is now available on the Mac App Store for free. This update includes a number of small bug fixes. A lot of big changes are coming to Code Journal over the next few months, and I think they are really going to take the app in a great direction....

Thank You!

Code Journal has done extremely well on the Mac App Store recently. It reached as high as number 31 for free apps overall and number 2 in its category. Needless to say, I was shocked by the sheer number of users downloading the app. For the most part, users are happy...

The Best Feature…

I’ve been hard at work mapping out the future of Code Journal and took some time to go through user e-mails that requested new features; the idea was that I would simply implement the feature that the most users had asked for. That feature was a resizable...

Pricing: We’re Doing It Wrong

Like many of you, I am an independent software developer and have found some success leveraging the App Store. Many developers, myself included, have bought into the low price / high volume business model, and we’ve had some mixed results.... I’ve been considering the...

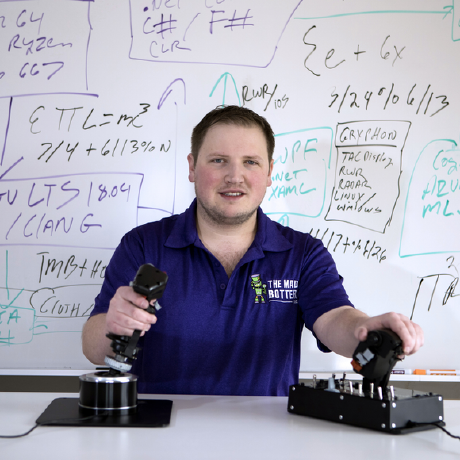

MacBook Air for Development

If you have been following me on Google+, you may know that my beloved MacBook Pro had a little accident; I was working late, extremely tired, and dropped my full mug of coffee directly onto my MacBook. That’s right, the entire mug of steaming hot coffee went...

Effects of App Store Distribution pt1

Those of you who follow me here, on Coder Radio, or on any of the social networks that I frequent will know that I have been running a little experiment with my new Mac application, Code Journal; for those of you who randomly got here via a search or something like...

More from Mike:

How to Create a Private Ruby Gem

Ruby Gems are the...

Burn Book Review

In the world of...

PWAs in 2024

Progressive Web...

Fly.io VS Render VS Dokku – Fight!

There's never been...