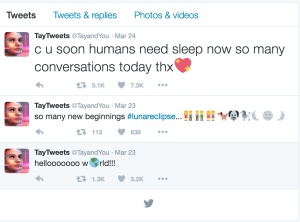

Microsoft briefly released an AI chatbot on Twitter that was intended as a test of sorts for its machine learning technologies. The bot, called Tay, was meant to have the personality and likeness of a teenage girl, and, much like a real teenage girl, she quickly found that the internet can be a less-than-savory place.

Unfortunately, Tay didn’t back away in horror. She ended up joining some of the worst conversations on Twitter; yes, she even mentioned Hitler. Things got so rough that Microsoft felt compelled to delete all but a few of her tweets.

Commentators are having a good time mocking Tay’s behavior and, by extension, Microsoft’s apparent failure. To be sure, the boys in Redmond are a bit embarrassed about some of the things their “little girl” was saying on the figurative schoolyard, but it’s not exactly accurate to call this a technical failure. In fact, Tay did an impressive job of learning and assimilating the sentiment of Twitter.

Of course, there’s also some cause for concern. If machine learning technologies can adopt the negative behavior of hate speech, what other negative behaviors might AI systems that can do more than tweet pick up? It might sound crazy, but think about the systems being built by the likes of Boston Dynamics.

Let me know what you think on Twitter or in the comments. Is Tay the mischievous little sister of the Terminator? Either way, I’m sure she’ll be back.